Probabilistic Predictions with Federated Learning

- Author:

-

Source:

Entropy 2021, 23(1), 41

DOI: 10.3390/e23010041

MDPI, Basel, Switzerland - Date: 30 December 2020

Abstract

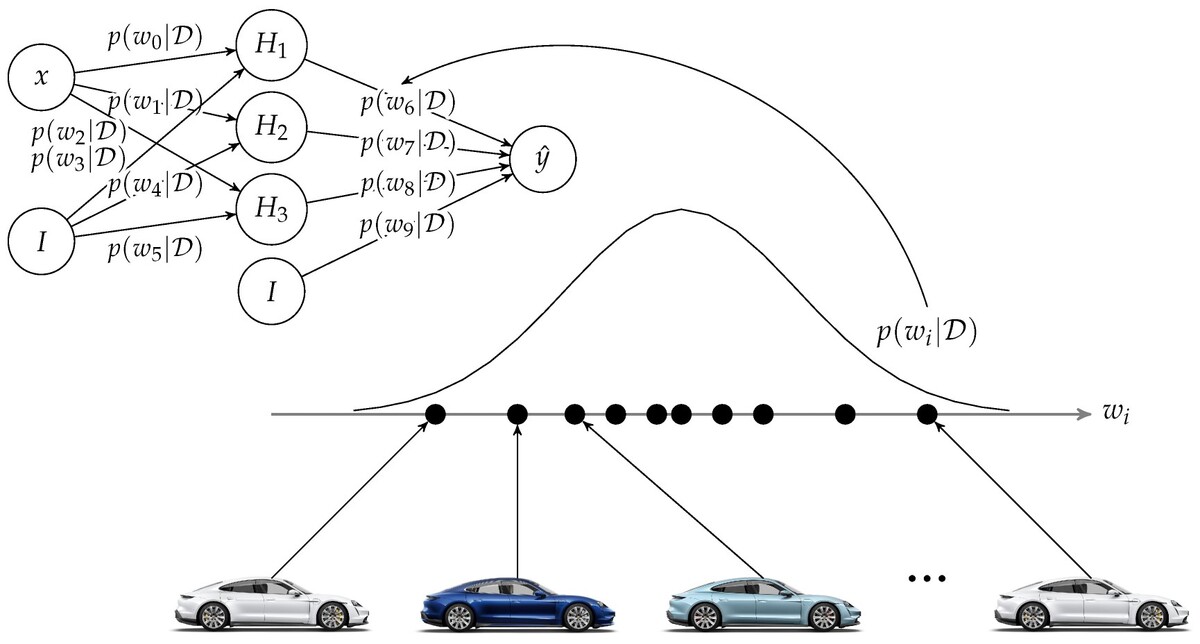

Probabilistic predictions with machine learning are important in many applications and are commonly produced with Bayesian learning algorithms. However, Bayesian learning methods are computationally expensive compared with non-Bayesian methods. Furthermore, the data used to train these algorithms are often distributed over a large group of end devices. Federated learning can be applied in this setting in a communication-efficient and privacy-preserving manner, but it does not provide predictive uncertainty. To represent predictive uncertainty in federated learning, we propose introducing uncertainty in the aggregation step of the algorithm by treating the set of local weights as a posterior distribution for the weights of the global model. We compare our approach to state-of-the-art Bayesian and non-Bayesian probabilistic learning algorithms. By applying proper scoring rules to evaluate the predictive distributions, we show that our approach can achieve performance similar to what the benchmark would achieve in a non-distributed setting.
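
The aggregation idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract only states that the set of local weights is treated as a posterior distribution, so the independent per-weight Gaussian fit, the logistic model, and the function names `aggregate_as_posterior`, `predictive_probability`, and `brier_score` are all assumptions made for illustration. The Brier score is used here as one example of a proper scoring rule; the abstract does not say which rules the paper applies.

```python
import numpy as np


def aggregate_as_posterior(local_weights):
    """Summarize client weight vectors as a per-weight Gaussian.

    Assumption: an independent Gaussian per weight. The abstract does not
    specify the form of the posterior.
    """
    stacked = np.stack(local_weights)      # shape: (n_clients, n_weights)
    mean = stacked.mean(axis=0)            # plain unweighted average of the client weights
    std = stacked.std(axis=0, ddof=1)      # spread across clients, read as uncertainty
    return mean, std


def predictive_probability(x, mean, std, n_samples=1000, seed=0):
    """Monte Carlo estimate of P(y=1 | x) for a hypothetical logistic model."""
    rng = np.random.default_rng(seed)
    # Draw global weight vectors from the fitted Gaussian.
    samples = rng.normal(mean, std, size=(n_samples, mean.size))
    logits = samples @ x
    # Average the sigmoid outputs over the sampled weight vectors.
    return float((1.0 / (1.0 + np.exp(-logits))).mean())


def brier_score(prob, outcome):
    """Brier score for a binary outcome (0 or 1); lower is better."""
    return (prob - outcome) ** 2


# Three hypothetical clients, each holding a two-weight local model.
clients = [np.array([0.9, -0.4]), np.array([1.1, -0.6]), np.array([1.0, -0.5])]
mean, std = aggregate_as_posterior(clients)
p = predictive_probability(np.array([1.0, 2.0]), mean, std)
print(mean, std, p, brier_score(p, 1))
```

In this sketch, clients that disagree more produce a larger `std`, which widens the sampled weight distribution. Weighting clients by their data size, or using a non-Gaussian posterior, would be natural variations that the abstract leaves open.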